
The peer review process: Yesterday, today and tomorrow

Correspondence Address:

Saumya Panda

Belle Vue Clinic, Kolkata, West Bengal

India

How to cite this article: Panda S. The peer review process: Yesterday, today and tomorrow. Indian J Dermatol Venereol Leprol 2019;85:239-245

Scholarly peer review is the process by which research articles are appraised by editors and referees before being accepted for publication in, or rejected by, an academic journal. It is a system in which the research community is embedded as a quality filter. It is an integral part of the process by which we ensure two critical domains of quality in research and publishing: integrity and ethics. By integrity, we allude to reliability, replicability, trustworthiness, impact and utility of published research. The objectives of peer review in this domain are ensuring that the research is technically sound and that errors are identified and corrected, that the research process is fully evidenced and that the data are properly presented and analyzed. By ethics, we refer to the regulated ethical requirements for doing research (human and animal research, in particular), as well as equally important community-driven obligations (such as authorship and publication practices), how these are represented in a specific research publication and ensuring that any evidence of malpractice is acted upon.[1]

As a part of scientific practice, peer review, as we know it, is less than a century old. It is easy to forget how young or old the concept of peer review is, or that its execution has been in a state of near-constant flux since its inception.[2] Therefore, it is a worthwhile exercise to explore its origins as we try to understand the calls to reform peer review.

The Origins

The earliest mention of a process similar to peer review dates back to the ninth century in Syria. In the Adab al-Tabib, a scholarly tome on medical ethics based on Hippocrates and Galen, its author Ishāq bin Ali al-Ruhawi asserts that physicians should keep detailed records of the prescribed course of care and that these records ought to be subject to review by a local council of physicians who would determine whether the treatment prescribed was appropriate.[2]

Scholarly peer review is commonly conjectured to have originated soon after the formation of the Royal Society of London in 1660. The Philosophical Transactions, compiled and edited solely by the society's Secretary, Henry Oldenburg, was conceived as an open notebook that academics could contribute to. Whether a contribution would be accepted for publication was a decision left solely to the editor. While it may have been the case that Oldenburg informally sought the help of experts in judging whether a scientific work was worthy of publication, this was as far removed as anything from peer review as it is understood today.[2] However, the point we want to make here is that scholarly communication along with peer review, in some form or other, is an intrinsic part of the scientific method and has been there ever since science in its recognizable form emerged.[3] Peer review or certification to establish the validity of the findings is one of the key functions of scientific publication, identified by Henry Oldenburg and Robert Boyle, that have characterized it since the seventeenth century,[4] as noted by Robert Merton three centuries later.[5] It is one of the pillars of what Merton calls “organized skepticism.”

Almost a century later, the Royal Society would assume editorial responsibility for its journal and adopt a system of review more akin to what we have today. It constituted a “Committee of Papers,” a select group that would decide which papers would be included in the Philosophical Transactions. However, it is worth noting that an important component of peer review, the referee's report, was conspicuous by its absence at this point in time.[2]

It was William Whewell who, in 1831, boldly suggested that teams of eminent scientists (a term he invented) might compile reports on the submissions to Philosophical Transactions, with the reports intended for publication in a new, cheaper monthly periodical called the Proceedings of the Royal Society. He surmised that this might serve to publicize the scientific work done by the society's fellows and so increase the public's appreciation and understanding of science. This well-intentioned experiment, far ahead of its time, bit the dust within 2 years: any negative reports that may have been written were never published.[2]

After the Second World War, academic journals slowly adopted the format that is most prevalent today: single-blind (or reviewer-anonymous) peer review. In this scheme of things, journals are run by a team of editors, who in turn maintain a network of colleagues who, upon request, will write reviews of scientific articles sent to them. The editors then decide whether to accept, send back to the author for revision, or reject a paper based on the reviewers' recommendations.[2]

The Current System of Peer Review: Pros and Cons

The blinded peer review system has held sway for the past 75 years with some modifications. For example, some journals (similar to ours) have adopted double-blind peer review, which sends the referee a copy of the paper with the names and institutional affiliations of the authors redacted: this is done to correct for possible biases. Although there is evidence that it does work,[6] critics claim that it does not effectively anonymize the author,[7] especially in smaller communities of researchers.

Ironically, the capacity to anonymize has been further compromised with modern attempts to obtain unbiased evidence. For example, practically speaking, no randomized controlled trial may be effectively anonymized any more, thanks to the prerequisite of preregistration of the trials in clinical trial registries that has become the standard norm for journals worldwide (including IJDVL). With the registry details to be mentioned mandatorily in any trial report, it takes an interested reviewer just a few clicks to have a fair idea of the author details including institutional affiliations.

The major criticism of single-blinded review is that it leaves the door ajar for subjective bias of all kinds (personal, or based upon gender, race, institution, geographical location, country of origin, etc.). Conversely, critics of the double-blind system sometimes point to the obverse: the difficulty of arriving at a decision objectively in the absence of the redacted details.[7]

Such uncertainties have led to a situation where surveyed researchers, both as authors and as reviewers, no longer express a clear preference for single- or double-blinded review when asked which type of review makes them more or less likely to submit to, or review for, a journal. A decade earlier, the preference was very much in favor of double-blind review.[8] However, at an individual level, the relative preferences are expressed more unequivocally. As an editor, I have encountered at least one reviewer, otherwise very active internationally, who refused to review because he was not comfortable with the double-blind system. For him, the nondisclosure of the authors' identity and institutional affiliations is an impediment to a complete appraisal. At the other extreme, a very senior reviewer recently refused to review an article in this journal (the first such instance, according to him, in 17 years!) as the authors' identities had been unblinded accidentally in the article file sent to him. Though both these examples are extreme, and most authors and reviewers are comfortable with either form of blinding, there is still a significant subset that is willing to participate in only one of these systems.

Is blinding overrated? Let's face it. The system operates on trust. It is not possible to ensure objectivity in absolute terms as we do not even have an operational definition of peer review. We all know, however, what peer review is, in abstract terms, just as we know what poetry, love or justice is (to paraphrase Richard Smith).[9] As we have seen, in a climate of enormous trust deficit, no degree of blinding may be deemed sufficient. Even in a double-blind system, the editors may be biased as they are aware of the authors' identity. So, how to get rid of that? By devising a triple-blind system, where the authors are identified by editors only as numbers?[10] Even this will not work if the authors are close to the editors or if they communicate with the latter. The answer to that will perhaps be a quadruple-blind review, where the name of the editor itself will be a secret. This will also not rule out bias altogether, as readers might cite works only of the reputed scholars. If you want to avoid that, you have to opt for quintuple-blinded review, where the authors' names are hidden, for a certain period, at least. One can opt for a still higher level of blinding, sextuple blinding, where even the journal name is blinded, and the reader would know nothing about the authors, editors, reviewers or the journal. Through an elaborate joke, Fabio Rojas makes us see that trying to rule out bias altogether will lead us to ludicrous lows, giving rise to an Alice in Wonderland kind of situation.[10]

However, the survey referred to earlier reported that peer review remains broadly supported: 82% agreed with the statement “without peer review there is no control in scientific communication,” unchanged from the 83% response in 2007 and 2009.[8] Researchers continued to value the benefits of peer review, with 74% agreeing that it improves the quality of the published paper, very similar to 2009 (77%).[8] To underscore the value of peer review, a recent BMJ editorial has come down hard on the increasingly common trend of publicizing trial results before peer review and has warned that “clinicians should remain sceptical until peer reviewed findings are published in full.”[11] The immediate provocation was a press release hailing oral semaglutide, an analog of glucagon-like peptide 1, for a “significant reduction in cardiovascular death and all-cause mortality.” This was before the study was published in a peer-reviewed journal. The truth was that the report was based on a noninferiority placebo-controlled trial of semaglutide, meaning that semaglutide was not inferior, but not superior either, to placebo for the prespecified primary endpoint: a composite outcome of cardiovascular death, nonfatal myocardial infarction or nonfatal stroke.[11]

The utility of peer review has had to be reiterated in the wake of the recent fashion of running down the system in a concerted manner. A very recent example is a Medscape article with a screaming headline: “To maintain trust in science, lose the peer review.”[12] It worked: the article got huge attention, all the more for its scandalous tone. It was a hatchet job on the peer review system from the word go. And the authors left no stone unturned to achieve their objective. For example, they ran down the scientific publishing system by citing the shenanigans of the predatory journals, admitting in the same breath that these journals often bypass peer review! The authors display a strangely narrow view of how peer review works in the real world; they have supposedly not heard of multidisciplinary peer review, which is par for the course even in specialty journals such as ours. One of their equally original observations is that once published, a study can lead to a cascade of mainstream media reports. The problem is that these days, under intense commercial pressure, this is happening well before peer-reviewed data are published.[8] Anyway, the really breathtaking part of the whole article is the solution it proffers: peer reviewers from the worlds of journalism and public policy have to be engaged in order to improve “translation of science”![12] The frivolous article, having no scientific pretensions, could well have been ignored, if not for the very important question it raises: Why have the authors made a pitch for reorienting scientific literature toward a journalistic approach? Is it in order to make it easier for fake news to percolate into biomedicine? Anyway, it is a recipe for disaster.

Commercial pressures are telling upon the publishing industry and the traditional publication process in a lot of ways. The pharma and the medical devices industries are finding social media to be useful allies, particularly as advances in social media technologies far outpace government regulations and do not care much for established norms of ethics in scholarly communications. One example is medical promotion as the latest Instagram influencer frontier, as the industry partners with influencers to sell new drugs and devices and to build trust online.[13] We have to assess any criticism of the peer review process objectively, keeping these facts in mind. The more peer review is denigrated, the more acceptable and “prestigious” these practices become.

However, it cannot be anyone's case that everything is hunky-dory in the peer review world. Richard Smith, a distinguished ex-editor of BMJ, brought the inconsistencies and defects of the peer review system into focus (consumption of time, skill and resources, failure to completely resolve bias, publication bias, qualitative discordance, scope for dishonesty and abuse).[9] After dissecting the inadequacies in the modifications of standard peer review (double blinding, open review), Smith concluded that peer review would continue to remain central in scientific publishing because of the TINA ('There Is No Alternative') effect, not because of its efficacy.

The major problem of objective assessment of the effectiveness of peer reviews is the stark absence of evidence. This deficiency of empirical evidence was documented by a Cochrane systematic review of 28 studies when it concluded that “little empirical evidence is available to support the use of editorial peer review as a mechanism to ensure quality of biomedical research.”[14] Though the review took pains to emphasize that “the absence of evidence on efficacy and effectiveness cannot be interpreted as evidence of their absence,” the detractors of peer review system have misread it all the same, wilfully or otherwise.[12]

So the overt negativity against the peer review process is perhaps uncalled for. But does it represent some fundamental crisis in scientific publishing? Data from about a decade ago tell us that in a given year there were 1.4 million articles published in peer-reviewed journals, one every 22 seconds, while a typical peer review would take 2–4 hours.[15] The industry average of time taken per paper for review was 80 days.[3] The gulf between the sheer volume of submitted articles and the reviewer pool has only widened in the meantime. On the one hand, it has put further pressure on a limited pool of reviewers, arguably leading to qualitative decline of the review process, and on the other, a burgeoning gap will lead to collapse of the peer review system as we know it. The reports of various surveys have set the alarm bells ringing.[8] The widespread negativity regarding the peer review system as a whole, voiced without carefully evaluating and interpreting the evidence and akin to throwing the baby out with the bathwater, may well be a sign of desperation in a system seeking to short-circuit itself simply because it finds the current paradigm unsustainable.
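The figures quoted above can be checked with simple arithmetic. The following is a minimal sketch in Python and is not taken from the cited sources: it divides the seconds in a non-leap year by the 1.4 million articles, then multiplies the article count by the 2 to 4 hours quoted for a typical review. The function names and the default of a single review per article are assumptions made purely for illustration.

```python
# Back-of-the-envelope check of the publication and review-time figures.
# Assumption: one review per published article (hypothetical default).

SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # non-leap year


def seconds_per_article(articles_per_year: int) -> float:
    """Average interval, in seconds, between two published articles."""
    return SECONDS_PER_YEAR / articles_per_year


def review_hours(articles_per_year: int,
                 hours_per_review: float,
                 reviews_per_article: int = 1) -> float:
    """Total reviewer hours spent per year under the stated assumptions."""
    return articles_per_year * hours_per_review * reviews_per_article


articles = 1_400_000                # articles per year, as quoted in the text
interval = seconds_per_article(articles)
low = review_hours(articles, 2)     # lower bound: 2 hours per review
high = review_hours(articles, 4)    # upper bound: 4 hours per review

print(f"One article every {interval:.1f} seconds")
print(f"Reviewer time per year: {low:,.0f} to {high:,.0f} hours")
```

Under these assumptions the interval works out to about 22.5 seconds, consistent with the figure in the text, and the reviewing effort to between 2.8 and 5.6 million hours a year. Since journals commonly use more than one reviewer per paper and rejected submissions are not counted here, these totals are best read as a floor.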

Other than failing in the task of ensuring absolute objectivity (an ideal objective that is perhaps not pragmatic), what are the more flagrant shortcomings of the editorial peer review system that have become evident in recent times? That all is not well may be understood from the very fact that an editor of a top-notch medical specialty journal had to resign very recently following retraction of an article containing racist characterizations.[16] In a system working with a reasonable degree of efficiency, bias of such a gross degree and quality could never take place.

In a very recent retrospective analysis of prospectively collected 4-year data (2013–2016), a substantial minority of top-tier US-based physician-editors of highly cited medical journals were found to receive payments from the industry within any given year, sometimes quite large.[17] This certainly does not help in improving public opinion of peer review.

Adding to this is the increasing occurrence of rogue peer reviewers. Who are they? The rogue activities can be of several kinds:[18]

- Someone who pretends to be a peer reviewer but is actually not;

- Someone who purposely delays the process by raising false objections or recommends outright rejection of a paper to roadblock its publication so that her/his work on a similar topic can be published first or the publication of the rival work may be stymied forever, or in other words, someone who intentionally sabotages the work being reviewed; or

- Someone who will not review unless (s)he can publish the article publicly online, e.g. in her/his blog; or

- Someone who purposefully uses the peer review system to benefit oneself—utilizing manuscripts sent for reviews and presenting these or some parts as their own, not disclosing conflicts, etc.; or

- Someone who recommends a paper to be rejected unless it cites the reviewer's work;[19] or

- Someone who writes reviews with no constructive content and gives recommendations to accept/reject without assigning any reason or without pointing out the shortcomings; or

- Someone who engages junior colleagues or students to do the reviewing on one's behalf.

As the research literature burgeons, it is getting increasingly contaminated by incorrect research results, arising not only from unconscious bias and poor research methods but also from intentional fraud. Peer review has been found to be rather ineffective in combating organized deception. An example has been provided by molecular oncologist Jennifer Byrne, who, in 2015, stumbled upon a clutch of papers produced from China based on a gene first reported by her and co-workers in 1998.[20] All were by different authors based in China, but the articles shared peculiar irregularities and had a high degree of resemblance in language and figures. Byrne surmised that the papers came from third parties working for profit. Since most of the protein-coding and nonprotein-coding genes in the human genome are currently understudied, she hypothesizes that such third parties are targeting less well-known human genes to produce low-value and possibly fraudulent papers. If genes are understudied, reviewers are unlikely to have the expertise needed to spot problems, and that is how the articles are giving the peer review system the slip. If an organized fraud ring operates, manuscripts could be distributed to different author groups, and submitted, over similar time periods, across many journals to avoid detection. Byrne and Cyril Labbe have developed a tool, Seek and Blastn (go.nature.com/2hsk06q), to identify papers on the basis of wrongly identified nucleotide sequences.[21]

In a way, many of the inherent problems of peer review stem from the system having its role in two different domains: besides examining the scientific rigor of the work, peer review also assesses the scholarly importance of the submission. From the vantage point of journals competing for readership, scholarly importance becomes synonymous with citability. This is a major side-effect of measuring journal prestige through citation indices in order to evaluate research.[22] Only a few journals such as PLoS ONE have effectively delinked the review process from judging the importance of the work and have left it to the readers after publication. In this scheme of things, the reviewers are asked by the editors whether the science is rigorous, ethical, properly reported and the conclusions are backed by data but are not asked if it is important. Perhaps more journals should emulate the same.

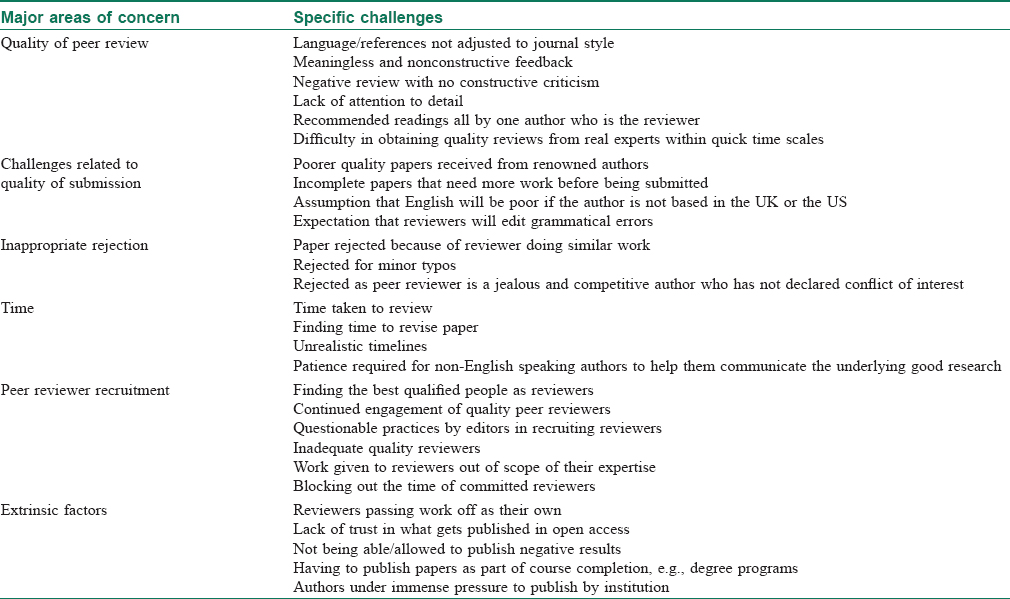

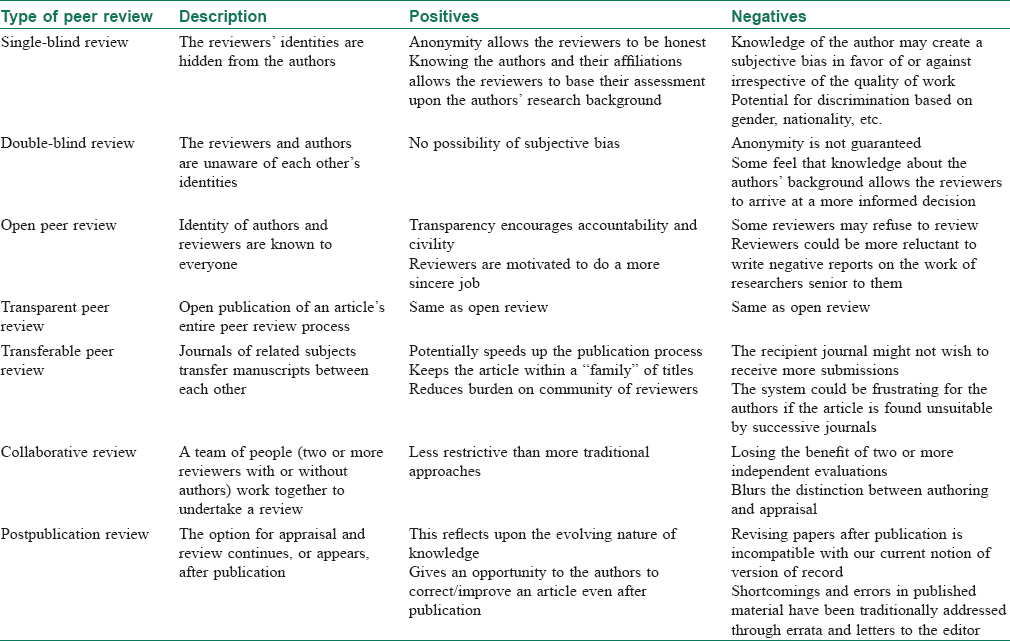

[Table - 1] summarizes the challenges encountered by the peer review-based publishing system.[18] [Table - 2] presents the pros and cons of various kinds of peer review that have been tried out in practice until now.[23]

Back to the Future

From the background given above, it is apparent that it is not peer review per se, but its abuse or inefficient or incorrect use that is at the root of most, if not all, of the ills. Thus, not all the criticisms of peer review are valid, and some are sorely lacking in evidence. Having said that, calls for improving the system are very much pertinent and timely. Although the most radical of the prescriptions would call for doing away with peer review altogether and supplanting it with a so-called “contextual review” to be done by journalists, public policy experts, etc., there have been numerous well-intentioned proposals to fine-tune the process as we know it, primarily in order to make it more impervious to bias of all sorts.[11]

One of the earliest reforms to be mooted was the open review system in its various formats. However, surveys found that researchers are less likely to submit papers to journals with open review systems and are also less likely to agree to review papers for those journals.[15] Perhaps not so surprisingly, published reviews, a variant of the open review system, fared badly in more systematic studies. For example, a randomized experiment by the BMJ group found that 55% of reviewers declined to review if their report would be published with the article and that papers took longer to review if the reviews were intended to be published.[15] More importantly, these interventions had no effect on the quality of the reviewers' opinions. Those were neither better nor worse than the blinded reviews.[24] In fact, there is no evidence that either making the review process open or making it double blind improves the quality of review compared to the traditional single-blind review.[25] Still, the Expert Group of the European Commission, in its recommendations, calls for greater transparency of peer reviews including publication of signed reports.[4] Significantly, the likes of BioMed Central, PeerJ and F1000 Research have welcomed open peer review, whereas myriad journals, including Nature Communications, are trialing such processes. And, importantly for scholarly publishing, Elsevier has just revealed plans to add optional open peer review to its fleet of 1800 journals by 2020.[26] The expectation is that the open system, and gradually the publication of the whole article history, including authors' responses, would foster the accountability of peer review that has been called into question. In this vision, the scholarly record would include not just a version of record but rather a record of versions, covering all the different kinds of contributions produced.[4]

Parallel to the move for open review has come the realization that recognition counts. That is the only way that the unquantifiably valuable work of the reviewers may be acknowledged, other than giving honoraria. Already, there are platforms that publish peer reviews that can be subsequently added to the reviewers' curricula vitae. Publons and F1000 Research are but two, perhaps the best-known, examples of such sites. There is strong indication that more and more journals will allow their reviewers to cite their reviews on these sites, if not to publish those. Already a few publications, including those from the EMBO Press and eLife, publish the peer reviews alongside the papers, and there is a growing clamor for journals to produce evidence that peer review has actually been carried out with a certain degree of quality and thoroughness.[27]

One of the major criticisms of the peer-review process from researchers is the length of time it takes. There have been some attempts to help speed it up. In a novel approach called “portable peer review,” BMC Biology plans to provide rival publications with peer reviews of papers it rejects. The aim is to cut down on wasted time in the peer-review process by providing these reviews for reuse, for instance, when the manuscript did not fit the scope of the publication.[28]

In September 2016, the open access journal BMC Psychology announced the launch of the first ever randomized controlled trial to determine if a “results-free” peer-review process could help to reduce the age-old academic research problem of publication bias. Put simply, results-free means reviewers do not see the results or discussion sections of submitted articles until the end of the review process. Such a peer-review process could ensure a piece of research is judged solely on the strength of its methods, not its outcome.[26]

Another evolutionary step has been the introduction of “author-mediated peer review” on the Wellcome Open Research publishing platform maintained by the Wellcome Trust. This is a variant of postpublication review, with the spin that the authors mediate the process themselves, leaving editors out of the business, literally! Authors submit their work to the platform, and after rapid quality checks and screening, including some integrity and ethics qualities, the author's research is published immediately. After publication, the author is incentivized to get their work peer reviewed (e.g., only work that is peer reviewed is then indexed in PubMed Central and Europe PubMed Central). They pick and invite the reviewers. If the author fails to get it peer reviewed (and positively peer reviewed), then it likely sits on the platform. The authors choose whether they address any points raised by the peer reviewers. Authors are totally in charge.[1]

Community-mediated peer review takes things one step further. Right now, researchers can post their manuscripts to “preprint servers” where they can create a permanent published, but not peer reviewed, record of their work. They might then choose to submit their work to a traditional journal, for peer-reviewed publication. An example is bioRxiv, the preprint server for biology. Reputable preprint servers do check preprints before publishing them (bioRxiv says “all articles undergo a basic screening process for offensive and/or non-scientific content and for material that might pose a health or biosecurity risk and are checked for plagiarism”). They do not do peer review. But they may enable the communities of researchers that use preprint servers to decide for themselves to assemble, to peer review and offer comments on preprints, and thus, again after publication, to take care of quality, including integrity and ethics.[1]

Concluding Remarks

The peer-review system is facing a lot of challenges these days. If the trust deficit toward the system as a whole is one of the major bugbears, the gross mismatch between the burgeoning numbers of submissions and a stagnant or dwindling pool of quality reviewers, raising questions about the sustainability of peer-reviewed publishing as a model, is another. Already there are some extremist calls to get rid of the flawed system altogether. That there are some flaws in the system is an unquestionable fact. Yet, evidence regarding the lack of efficacy of peer review is lacking. Peer review, since its beginning, has always been in a state of flux. The churning that we are witnessing currently may lead to greater transparency and further lessening of bias in the times to come. After all, peer review mimics democracy in that it is the worst form of appraisal except all the other forms that have been tried from time to time. Thus, like the latter, it is irreplaceable at the moment and in the foreseeable future.

1. Graf C. Cause and effect. Res Inf 2019. Available from: https://www.researchinformation.info. [Last accessed on 2019 Mar 11].

2. Raman M. To reinvent peer review, we must reinvent how we pay peer-reviewers back. Wire 2018. Available from: https://www.thewire.in. [Last accessed on 2019 Mar 11].

3. Wilkie T. Trends in scholarly publishing; transcript of London Book Fair presentation. Res Inf 2014. Available from: https://www.researchinformation.info. [Last accessed on 2019 Mar 11].

4. European Commission. Future of Scholarly Publishing and Scholarly Communication: Report of the Expert Group to the European Commission. Luxembourg: Publications Office of the European Union; 2019.

5. Merton RK. The Sociology of Science: Theoretical and Empirical Investigations. Chicago: University of Chicago Press; 1962.

6. Budden AE, Tregenza T, Aarssen LW, Koricheva J, Leimu R, Lortie CJ. Double-blind review favours increased representation of female authors. Trends Ecol Evol 2008;23:4-6.

7. Yankauer A. How blind is blind review? Am J Public Health 1991;81:843-5.

8. Mark Ware Consulting. Publishing Research Consortium (PRC) Peer Review Survey; 2015. Available from: http://www.publishingresearchconsortium.com. [Last accessed on 2019 Mar 17].

9. Smith R. Peer review: A flawed process at the heart of science and journals. J R Soc Med 2006;99:178-82.

10. Rojas F. Modest proposal: triple blind review; 23 January, 2007. Available from: https://www.orgtheory.wordpress.com. [Last accessed on 2019 Mar 31].

11. Fralick M, Sacks CA. Publicising trial results before peer review. BMJ 2019;364:l556.

12. Mazer B, Mandrola JM. To maintain trust in science, lose the peer review. Medscape; 19 February, 2019. Available from: https://www.medscape.com. [Last accessed on 2019 Mar 18].

13. Zuppello S. The latest Instagram influencer frontier? Medical promotions. The Goods by Vox; 15 February, 2019. Available from: https://www.vox.com. [Last accessed on 2019 Mar 26].

14. Jefferson T, Rudin M, Brodney Folse S, Davidoff F. Editorial peer review for improving the quality of reports of biomedical studies. Cochrane Database Syst Rev 2007;MR000016.

15. Harris S. Access, review and changing brains. Res Inf 2011. Available from: https://www.researchinformation.info. [Last accessed on 2019 Mar 19].

16. Oransky I. Editor resigns following retraction for 'racist characterizations'. Medscape; 19 February, 2019. Available from: https://www.medscape.com. [Last accessed on 2019 Mar 21].

17. Wong VS, Avalos LN, Callaham ML. Industry payments to physician journal editors. PLoS One 2019;14:e0211495.

18. Peck L. Rogue peer review – A polysemy in the making. Res Inf 2019. Available from: https://www.researchinformation.info. [Last accessed on 2019 Mar 27].

19. Oransky I. The case of the reviewer who said cite me or I won't recommend acceptance of your work. Retraction Watch; 7 February, 2019. Available from: https://www.retractionwatch.com. [Last accessed on 2019 Mar 27].

20. Byrne J. We need to talk about systematic fraud. Nature 2019;566:9.

21. Phillips N. Tool spots DNA errors in papers. Nature 2017;551:422-3.

22. Brembs B. Prestigious science journals struggle to reach even average reliability. Front Hum Neurosci 2018;12:37.

23. Types of Peer Review. Available from: https://www.authorservices.wiley.com. [Last accessed on 2019 Mar 31].

24. van Rooyen S, Godlee F, Evans S, Black N, Smith R. Effect of open peer review on quality of reviews and on reviewers' recommendations: A randomised trial. BMJ 1999;318:23-7.

25. Justice AC, Cho MK, Winker MA, Berlin JA, Rennie D, PEER investigators. Does masking author identity improve peer review quality? A randomized controlled trial. JAMA 1998;280:240-2.

26. Pool R. Exposing peer review. Res Inf 2017. Available from: https://www.researchinformation.info. [Last accessed on 2019 Mar 30].

27. Slavov N. We must demand evidence of peer review. Scientist 2018. Available from: https://www.the-scientist.com. [Last accessed on 2019 Mar 31].

28. Wilke C. BMC Biology shares rejected papers' peer reviews with other journals. Scientist 2019. Available from: https://www.the-scientist.com. [Last accessed on 2019 Mar 30].
