Validation of a computer based objective structured clinical examination in the assessment of undergraduate dermatology courses

2 Department of Family and Community Medicine, King Faisal University, Hofuf, Saudi Arabia

3 Department of Ophthalmology, King Faisal University, Hofuf, Saudi Arabia

Correspondence Address:

Feroze Kaliyadan

Department of Dermatology, College of Medicine, King Faisal University

Saudi Arabia

How to cite this article: Kaliyadan F, Khan AS, Kuruvilla J, Feroze K. Validation of a computer based objective structured clinical examination in the assessment of undergraduate dermatology courses. Indian J Dermatol Venereol Leprol 2014;80:134-136

Abstract

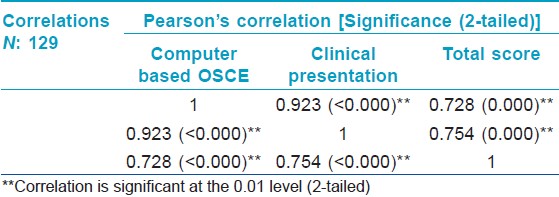

Many teaching centers have now adopted the objective structured clinical examination (OSCE) as an assessment method for undergraduate dermatology courses. A modification of the standard OSCE in dermatology is the computer-based or electronic OSCE (eOSCE). We attempted to validate the use of a computer-based OSCE in dermatology in a group of fifth-year medical students. The students' scores in the computer-based OSCE showed a strong positive correlation with their scores on the clinical presentation (Pearson's coefficient = 0.923, P < 0.001, significant at the 0.01 level) and a good correlation with their overall scores (Pearson's coefficient = 0.728, P < 0.001, significant at the 0.01 level), indicating that this is a reliable method for assessment in dermatology. Generally, the students' feedback regarding the method was positive.

Introduction

Assessment of undergraduate dermatology courses in most institutes continues to rely on the examination and presentation of clinical 'short cases' and 'long cases'. Unfortunately, these methods have relatively low reliability because of factors like a narrow sampling frame and a lack of objectivity. The objective structured clinical examination (OSCE) would be a good solution to this problem, but it requires a larger faculty base and more logistical support. [1] The main objectives of most undergraduate dermatology courses are the description of skin lesions and the diagnosis and treatment of common skin diseases. Computer-based images could be a good substitute for actual patients in achieving these objectives, and the objectivity of such an examination can be increased by conducting it in the form of a computerized OSCE. [2],[3] We have been conducting such a computer-based OSCE for the last two years in our institution. The questions are prepared by the faculty and have been modified after piloting on previous batches of students. In a batch of 129 fifth-year medical students, we aimed to correlate the scores obtained on a computer-based dermatology OSCE with the scores in the clinical case presentation and with the final scores, which included the written assessment and the professional behavior assessment. We also evaluated the students' feedback regarding this mode of assessment. Overall, this study aimed to validate the OSCE as an assessment method in dermatology and to assess the relative ease of administering it in the form of a computer-based OSCE.

Background

Dermatology training is a three-week compulsory rotation conducted in the fifth year of the undergraduate course in our institution. The dermatology course has three credit hours and the final score is incorporated into the students' grade point average (GPA). Traditional assessment in dermatology consisted of a written examination in the form of application-based MCQs (40%), clinical case presentations (40%) and formative assessment (including professional behavior assessment with a log book) (20%). For the last two years, we have modified this assessment pattern. While retaining the percentages of the written exam and formative assessment, we have split the clinical examination to incorporate a computer-based OSCE in dermatology along with the case presentation.

Methods

One hundred and twenty-nine medical students in the fifth year of their undergraduate course were assessed in this study using a computer-based OSCE. Each student was shown 16 images of common clinical cases and each image was followed by four questions. The questions commonly covered the description of skin lesions, diagnosis and differential diagnosis, investigation and treatment. Five minutes were allocated to each slide. The exam was conducted in small groups of not more than 10 students at a time, with two faculty supervisors. The images were displayed on a large screen using Microsoft PowerPoint®. The marks on the OSCE were statistically correlated with the marks obtained in the clinical case presentation and with the total marks, including the written examination. Student feedback was evaluated using a five-point Likert scale questionnaire along with open-ended questions. The Likert scale questionnaire had seven questions regarding the OSCE with a five-point rating (strongly agree, agree, undecided, disagree, strongly disagree). Statistical correlation between scores was assessed using Pearson's correlation coefficient. Consensus analysis of the student questionnaire was based on percentage agreement, and the open-ended questions were analyzed qualitatively.
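As an illustration of the correlation step described above, the following is a minimal sketch of how a Pearson coefficient between two sets of marks could be computed. The function name `pearson_r` and the five pairs of marks are hypothetical and are not data from this study; the source does not state which statistical software was used.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products of deviations from the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    # Sums of squared deviations for each variable.
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(ss_x * ss_y)

# Hypothetical marks for five students (illustrative only, not study data).
osce_marks = [12.0, 15.5, 9.0, 18.0, 14.0]
case_marks = [11.0, 16.0, 10.0, 17.5, 13.0]

r = pearson_r(osce_marks, case_marks)
print(f"Pearson r = {r:.3f}")
```

A coefficient close to +1 indicates that students who score well on one component tend to score well on the other.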

Results

The scores of the students in the computer-based OSCE showed a strong positive correlation with scores on the clinical presentation (Pearson's coefficient = 0.923, P < 0.001, significant at the 0.01 level) and a good correlation with overall scores (Pearson's coefficient = 0.728, P < 0.001, significant at the 0.01 level), indicating that this is a reliable method for dermatology assessment [Table 1].

The students' feedback regarding the method was generally positive. The questionnaire had good reliability (Cronbach's alpha = 0.78). Of the total, 81 (62.7%) students agreed or strongly agreed that they would prefer this method to other traditional assessment methods in dermatology. Ninety-nine (76.7%) students agreed or strongly agreed that the topics covered in the OSCE were relevant and 81 (62.7%) agreed or strongly agreed that the quality of the OSCE slides was good. Disagreement was seen on the time allotted for each slide, with 63 (48.8%) disagreeing or strongly disagreeing that the time per slide was sufficient. For the faculty, the method increased overall reliability because a wider sampling of the dermatology content was possible. Since each slide was attempted simultaneously by all students, the exam did not present any significant logistical difficulties either.
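The two questionnaire statistics reported above can be sketched as follows. This is a hedged illustration only: the function names `cronbach_alpha` and `percent_agreement`, the coding of the Likert scale as 1 (strongly disagree) to 5 (strongly agree), and the example ratings are assumptions, not details taken from the study.

```python
from statistics import pvariance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-student rating lists (one value per item).

    Assumes the total scores are not all identical (non-zero variance).
    """
    n_items = len(responses[0])
    if n_items < 2 or any(len(row) != n_items for row in responses):
        raise ValueError("every student needs ratings for the same 2+ items")
    # Variance of each item across students.
    item_vars = [pvariance([row[i] for row in responses]) for i in range(n_items)]
    # Variance of each student's total score.
    total_var = pvariance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)

def percent_agreement(ratings, agree_codes=(4, 5)):
    """Percentage of ratings coded as 'agree' (4) or 'strongly agree' (5)."""
    return 100.0 * sum(r in agree_codes for r in ratings) / len(ratings)

# Hypothetical ratings from four students on three items (assumed 1-5 coding).
example_responses = [[4, 5, 4], [2, 3, 2], [5, 5, 4], [3, 3, 3]]
print(f"alpha = {cronbach_alpha(example_responses):.2f}")

# 99 of 129 students agreeing; the exact split between codes is assumed.
relevance_ratings = [4] * 99 + [2] * 30
print(f"agreement = {percent_agreement(relevance_ratings):.1f}%")
```

With 99 of 129 ratings in the agree categories, the second call gives roughly 76.7%, which is consistent with the proportion reported for topic relevance.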

Discussion

Grover et al. [2] have previously documented the use of a computer-assisted OSCE (CA-OSCE) for undergraduate dermatology assessment and their study showed good acceptance by the students. That study highlighted the importance of considering such a method where there is a shortage of faculty in dermatology teaching institutes. However, it did not document how well the OSCE correlates with the overall performance of the student. [2] While the OSCE is definitely more objective than more traditional methods like case presentations, equal importance should be given to other aspects related to reliability and validity. One of the most important ways to increase reliability would be to increase the number of slides. Ensuring a wider sampling covering all the 'must-know' topics in undergraduate dermatology would also be essential to ensure that the assessment has good psychometric properties. [4] One of the disadvantages mentioned in the previous study by Grover et al. is the inability of the CA-OSCE to test the psychomotor and affective domains. We suggest overcoming this disadvantage by adding at least one traditional station for each of these domains at the end: for example, demonstration of making a potassium hydroxide smear (for the psychomotor domain) and counseling a simulated patient who acts as a parent of a child with an infantile hemangioma on the face (for the affective domain). The supervising faculty themselves can act as the simulated patient. Moreover, to be effective, the regular conduct of a computer-assisted OSCE requires stringent quality controls, including constant review of the stations based on peer and student feedback and effective standard-setting methods to ensure that the final assessment score is fair and reliable.
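The point above about increasing the number of slides can be illustrated with the Spearman-Brown prediction formula, a standard psychometric result that was not used in this study and is introduced here only as an assumption-laden sketch. It predicts the reliability of a lengthened test, assuming the added slides are comparable in quality and difficulty to the existing ones. The starting reliability of 0.70 is a hypothetical value, not a figure from this paper.

```python
def spearman_brown(reliability, length_factor):
    """Predicted reliability when test length is multiplied by length_factor.

    Assumes the added items are parallel to the existing ones.
    """
    if length_factor <= 0:
        raise ValueError("length_factor must be positive")
    return (length_factor * reliability) / (1 + (length_factor - 1) * reliability)

# Hypothetical scenario: extending a 16-slide exam to 24 slides (factor 1.5),
# starting from an assumed reliability of 0.70 (not a value from this study).
current_slides = 16
planned_slides = 24
predicted = spearman_brown(0.70, planned_slides / current_slides)
print(f"predicted reliability = {predicted:.2f}")
```

In practice, longer exams also demand more student time, so any gain predicted this way would need to be weighed against the time-per-slide concerns raised in the feedback.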

Conclusion

Computer-assisted OSCE in dermatology is an effective, simple and reliable alternative to traditional methods of undergraduate dermatology assessment. It shows a good correlation with overall academic performance of the student. Further longitudinal studies are required to evaluate how best psychomotor and affective domain assessment can be incorporated into this format and to set the optimum time allotment for each slide. A hybrid OSCE combining a computer-based model with traditional stations might be an effective method for assessment of undergraduate dermatology courses.

References

1. Kaliyadan F. Undergraduate dermatology teaching in India: Need for change. Indian J Dermatol Venereol Leprol 2010;76:455-7.

2. Grover C, Bhattacharya SN, Pandhi D, Singal A, Kumar P. Computer Assisted Objective Structured Clinical Examination: A useful tool for dermatology undergraduate assessment. Indian J Dermatol Venereol Leprol 2012;78:519.

3. Kaliyadan F, Manoj J, Dharmaratnam AD, Sreekanth G. Self-learning digital modules in dermatology: A pilot study. J Eur Acad Dermatol Venereol 2010;24:655-60.

4. Davies E, Burge S. Audit of dermatological content of U.K. undergraduate curricula. Br J Dermatol 2009;160:999-1005.
