Computer Assisted Objective Structured Clinical Examination: A useful tool for dermatology undergraduate assessment

Correspondence Address:

Chander Grover

420-B, Pocket 2, Mayur Vihar, Phase-I, Delhi - 110 091

India

How to cite this article: Grover C, Bhattacharya SN, Pandhi D, Singal A, Kumar P. Computer Assisted Objective Structured Clinical Examination: A useful tool for dermatology undergraduate assessment. Indian J Dermatol Venereol Leprol 2012;78:519

Abstract

Background: Dermatology is a minor subject in the undergraduate medical curriculum in India. The dermatology clinical postings are generally poorly attended, and the clinical acumen of an average medical graduate in this specialty is quite low.

Aims: To develop and implement Computer Assisted Objective Structured Clinical Examination (CA-OSCE) as a means of end of dermatology posting evaluation. Also, to assess its effectiveness in improving the motivation, attendance and learning of undergraduate students with respect to their visual recognition skills and problem solving ability.

Methods: We designed and introduced CA-OSCE as a means of end of posting assessment. The average attendance and assessment scores of students undergoing CA-OSCE were compiled and compared, using the 'independent t test', with the scores of previous years' students who had undergone assessment with essay type questions.

Results: The average attendance and average assessment scores for the candidates undergoing CA-OSCE were found to be 83.36% and 77.47%, respectively, as compared to 64.09% and 52.07%, respectively, for previous years' students. The difference between the two groups was found to be statistically significant. Student acceptability of the technique was also high, and their subjective feedback was encouraging.

Conclusion: CA-OSCE is a useful tool for assessment of dermatology undergraduates. It has the potential to drive them to attend regularly as well as to test their higher cognitive skills of analysis and problem solving.

Introduction

Dermatology is one of the 19 subjects constituting the undergraduate medical teaching curriculum in India. [1] However, it is regarded as one of the "not so important subjects" by undergraduates and policy makers alike. "More important subjects," which carry higher weightage in final exams, are given more time and effort. By the end of the dermatology posting, undergraduates are expected to be skilled enough to recognize common dermatoses, recommend routine bedside tests such as KOH examination and Nikolsky's sign, and interpret them. [1] The ground reality, however, is quite different. It has been widely reported that the clinical competence of undergraduates in the field of dermatology is low. [2],[3],[4] Most students do not see enough dermatology patients [2] and rely more on theoretical knowledge of various skin diseases. An average medical graduate cannot recognize many common skin diseases, let alone consider valid differentials for them. The problem is compounded by a paucity of trained faculty in almost all institutions.

In our institution, the 6th semester undergraduate students undergo a 1 month posting in the Department of Dermatology, which is followed by an end of posting assessment. This assessment is theoretical, based on Essay Type Questions (ETQ). Over the years, it has been observed that student attendance and motivation have been low, with most students relying on dermatology books to pass this exam. It is well known that "students learn what they are assessed for". As "assessment drives learning", we decided to change our assessment system to test our students on patient-simulated "real problems", with the aim of motivating them to attend the dermatology posting regularly. The objective was to motivate students to develop higher cognitive skills such as analysis, synthesis and problem solving, instead of only the lower cognitive domain of recall. To serve this aim, we developed a modification of the Computer Assisted Objective Structured Clinical Examination (CA-OSCE), with questions designed to test higher cognitive, problem solving skills.

The present study was aimed at assessing the impact of this improvised tool on dermatology undergraduate assessment scores. Its efficacy in improving the motivation of students and thereby improving their attendance during the clinical posting was also assessed objectively. The subjective feedback of students regarding this system was also assessed.

Methods

The study was carried out in the Department of Dermatology of a reputed tertiary level teaching hospital in Delhi over a period of 4 months (February to May) in 2010. The study group comprised 6th semester students attending their dermatology posting during this period and undergoing CA-OSCE at the end of their posting. An equal number of 6th semester students who had attended their posting in the 2 previous years and taken ETQ-based assessment were taken as controls. The study protocol was approved by the committee of medical education experts during the 64th National Teacher Training Course (NTTC) on Educational Science Technology attended by one of the authors.

Study group

The study group comprised 6th semester students undergoing dermatology posting as per the rules and norms specified by the Medical Council of India (MCI). [1] They were informed beforehand that their end of posting assessment would be in the CA-OSCE format, and they were prepared for this. Students on sick leave, those not routinely attending the posting due to supplementary exams, those with less than 50% attendance during the posting, and those who did not appear for their final assessment were excluded from the final analysis. The percentage of total classes attended as well as the marks scored in the final assessment were recorded.
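The exclusion criteria above can be expressed as a simple filter. The following is a minimal sketch only, not the authors' procedure: the record fields and the function name are hypothetical, and the sample records in the test data are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class StudentRecord:
    """Hypothetical record layout; field names are assumptions, not from the study."""
    attendance_pct: float          # percentage of posting classes attended
    on_sick_leave: bool            # student was on sick leave
    had_supplementary_exams: bool  # not routinely attending due to supplementary exams
    took_final_assessment: bool    # appeared for the end of posting assessment


def is_eligible(record: StudentRecord, min_attendance_pct: float = 50.0) -> bool:
    """Return True if the record passes all four exclusion criteria listed in the text."""
    return (
        not record.on_sick_leave
        and not record.had_supplementary_exams
        and record.attendance_pct >= min_attendance_pct  # "less than 50%" is excluded
        and record.took_final_assessment
    )
```

A student with exactly 50% attendance is kept under this reading, since the text excludes only those with less than 50%.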

Control group

An equal number of controls were retrospectively drawn from 6th semester students who attended their dermatology posting during the same months (February to May) in the 2 previous years. This group had undergone ETQ-based assessment at the end of their posting. The exclusion criteria applied were the same as those for the study group.

Administering the CA-OSCE

The CA-OSCE was designed with the help of Microsoft PowerPoint®. It consisted of 10 questions with equal weightage assigned to each of them. A standard set of instructions was projected first. Each question detailed a clinical problem, starting with a clinical photograph and clinical details, followed by a set of questions relating to identification of the disease, its causation, the investigations recommended, their interpretation, disease associations, and management or prevention issues. The questions were structured, requiring either one-word or short answers (SAQs), and the marks allotted were indicated clearly. Most of the questions were key feature questions (KFQs), which required the candidate to identify a key feature in the clinical problem that determined the clinical approach to be followed for problem solving.

The photograph bank and the question bank for this exercise were developed within our department. Each exam covered all the "must know" areas while avoiding any repetition. The exam was pre-reviewed by faculty members and pre-validated with residents. The slides were designed to advance at a pre-determined, standard interval, and it was not possible to navigate back. This allowed large groups to be examined in one sitting in a time-efficient manner.

The exam was conducted in the departmental seminar room, which offers comfortable seating for 25 students. Invigilation was done by 2 faculty members, who were free to supervise the students because the slides advanced automatically.

Data analysis

The attendance during the clinical posting and the assessment scores were meticulously recorded. The corresponding information about attendance and assessment scores for the control group was retrieved from departmental records. The average attendances as well as the average assessment scores for both the groups were then compared. Statistical analysis was done using SPSS version 16.

Subjective feedback was also collected from the study group participants regarding their perception of the new method of assessment.

Results

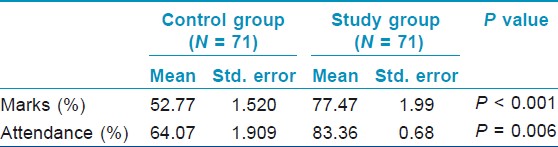

A total of 71 students were found to be eligible for inclusion in the study group. Their attendance and assessment scores were included in the final analysis. The students in this group attended 83.36 ± 0.68% of the classes on average (range 65.2% - 96%). Their mean assessment score was 77.47 ± 1.99% (range 30% - 100%). Attendance and assessment scores for an equal number of controls were retrieved from previous years' records and were included for analysis. The average attendance in this group was 64.09 ± 1.09% (range 25% - 100%), and the average assessment score was 52.07 ± 1.52%. Thus, both attendance and assessment scores were higher in the study group [Table - 1]. This could be a result of higher student motivation to attend their dermatology posting.

The data were statistically analyzed using SPSS. Levene's test for homogeneity of variance between the groups was not significant. Hence, the two groups were compared using the 'independent t test'. The difference between the 2 groups was found to be statistically significant.
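For readers unfamiliar with the comparison described above, the equal-variance independent t statistic can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not the authors' SPSS procedure: the function name is an assumption, the sample values are hypothetical and are NOT the study data, and Levene's test and the p-value lookup are omitted.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(group_a, group_b):
    """Student's independent two-sample t statistic, assuming equal variances.

    Returns the t statistic and its degrees of freedom (n_a + n_b - 2).
    """
    n_a, n_b = len(group_a), len(group_b)
    dof = n_a + n_b - 2
    # Pooled variance: weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / dof
    # Standard error of the difference between the two group means.
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, dof


# Hypothetical percentage scores for illustration only; NOT the study data.
study_scores = [80.0, 85.0, 90.0, 75.0, 85.0]
control_scores = [60.0, 65.0, 70.0, 55.0, 65.0]

t_stat, dof = pooled_t_statistic(study_scores, control_scores)
print(f"t = {t_stat:.2f}, df = {dof}")
```

The resulting t value would then be compared against the t distribution with the returned degrees of freedom to judge significance; a statistics package such as the one the authors used performs that step automatically.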

Students' subjective feedback was also encouraging. The format was reported to be interesting, challenging and stimulating by 91% of the candidates. About 77% of the candidates found it to be fair and equitable. However, a standardized time interval for each question was not welcomed by 34% of the study group participants.

Discussion

As is well known, the mode of assessment influences the learning style of students, [5] and medical students have been shown to be susceptible to this influence. [6] Hence, medical colleges are continually reviewing their assessment procedures, reporting newer regulations and developments, [7],[8],[9] and seeking ways to change their assessment procedures. An ideal assessment technique is one that can test the acquisition and utilization of core cognitive levels beyond mere recall. [10]

OSCE was first developed in 1979, [11] and since then, its utility in various medical specialties has been extensively evaluated. OSCEs are multi-station clinical examinations, shown to be effective in testing the ability to integrate knowledge, skills and attitudes acquired during training. [12] This format has been reported to have high validity and reliability. [10] Over the years, many modifications of OSCE, including GOSCE (group OSCE), [13] OSLER (objective structured long examination record) [14] and OSVE (objective structured video examination), [15] have been developed and evaluated. OSCE is particularly valuable for dermatology assessments as it effectively tests the visual recognition skills of a dermatology student. At the same time, the traditional format of OSCE is reported to have many disadvantages. It can be quite time-consuming and labor intensive, and requires extensive resources to execute. [15] The exam can also prove chaotic, as students in their nervousness may move to the wrong stations. [12] Thus, conducting a full-fledged OSCE for all undergraduates can be very difficult for dermatology departments, as there is always a significant resource crunch. Hence, the current modification was developed.

Competency-based assessments can be difficult to design. Newble et al. proposed essential steps to ensure the content validity of the designed assessment technique. [16] These were kept in mind while designing our CA-OSCE. As the first step, an examiner needs to identify the problems or conditions that a candidate should be able to assess and manage competently. For this, we focused on the "must know" areas in our dermatology undergraduate curriculum. The second step was to define the tasks within these problems or conditions. Hence, for each condition, we defined whether the student is required just to recognize the condition and refer, or is required to know the nuances of treatment as well. The third step was to construct a blueprint for the assessment. We achieved this by defining the sample items to be included in the tests and creating a question bank.

From [Table - 1], it can be seen that the higher absenteeism from clinical sessions in the control group was successfully brought down with the introduction of CA-OSCE. The students realized that visual recognition of dermatological conditions is not possible without attending clinics. To face an improved assessment system, the students modified their old learning practices and started attending clinical sessions regularly. We did not introduce any new or enhanced disciplinary measures, and our method of teaching and teaching schedule remained the same.

CA-OSCE brought down the subjectivity associated with the earlier means of assessment, leading to an improvement in the average scores in the study group. The fact that the students were attending more regularly also led to an improvement in their knowledge of dermatology, which is reflected in their scores. The students' positive subjective feedback revealed that CA-OSCE had high face validity as well.

The modification of the traditional OSCE to a CA-OSCE offered several advantages.

Ease of organization

Designing 18 - 20 stations for a batch of 18 - 20 students taking a conventional OSCE can be a herculean task. [12] Also, arranging a large number of patients with typical diseases every month can be very difficult. Johnson et al. described the use of standardized patients for conducting OSCE. [17] This is obviously not possible for dermatology exams, as standardized patients have no dermatoses to show. The CA-OSCE format successfully overcomes this problem, as characteristic dermatoses can be photographed in daily OPDs and a photo bank maintained, which can then be utilized for exam purposes.

Ease of administration

The total time to administer CA-OSCE was much less, as the time required for student transition between stations was saved. All the students received an identical set of instructions, clinical photographs and information, and a standard time frame to respond to questions. Any clarification sought by any one student was given to the whole group simultaneously. Hence, the reliability of CA-OSCE was high. CA-OSCE also required less space and fewer invigilators.

Ease of evaluation

As the format was objective and structured, with the allotted marks clearly indicated, evaluation was much easier. It was also just and fair towards the students, as subjectivity was greatly reduced.

Weakness of the study

We encountered certain disadvantages as well. CA-OSCE can test the cognitive domain effectively; however, evaluation of the affective and psychomotor domains with this format is not easy. The approach and attitude of an examinee towards a real patient, the method of examination, or how the examinee conducts himself or herself cannot be assessed with the CA-OSCE. Similarly, the skill of conducting routine bedside procedures cannot be assessed. The policy of giving a standard time to all questions through automated slide transfer also creates problems. Some questions may require shorter answers, and some students may be faster than others. This prompts disturbances in the group. The examinee is not allowed to go back and revise observations or responses, even if his or her opinion changes later in the course of the problem. It could also be argued that the higher attendance in the study group as compared to the control group could be entirely due to pre-informed awareness of a novel mode of assessment, causing curiosity in examinees. Further studies with larger student groups and prospective controls are needed to throw more light on this issue.

There is an acute shortage of faculty in most of our teaching institutions, and it is likely to worsen in the future. [18],[19] Despite this, it is imperative that quality instruction and training be provided to the undergraduates. Modification of OSCE to a computer-based format (CA-OSCE) appears to be an effective and innovative means of doing so.

Conclusion

CA-OSCE is an improved technique for dermatology assessment, which stresses visual recognition of disease and effectively evaluates the higher cognitive skills of learners. At the same time, it is less resource intensive and saves time, space and labor, bringing down the requirement of human and material resources. However, it is not an effective test of the interpersonal and psychomotor skills of the learners. Overall, it focuses on and reinforces the aspects of dermatological clinical competence that are necessary for clinical practice. Further controlled studies with larger numbers of students are needed to confirm these findings.

References

1. Medical Council of India. Salient Features of Regulations on Graduate Medical Education, 1997. Published In Part III, Section 4 of the Gazette of India Dated 17th May 1997. Available from: http://www.mciindia.org/know/rules/rules_mbbs.html. [Last accessed on 2011 Nov 14].

2. Kaliyadan F. Undergraduate dermatology teaching in India: Need for change. Indian J Dermatol Venereol Leprol 2010;76:455-7.

3. Davies E, Burge S. Audit of dermatological content of U.K. undergraduate curricula. Br J Dermatol 2009;160:999-1005.

4. McCleskey PE, Gilston RT, DeVillez RL. Medical student core curriculum in dermatology survey. J Am Acad Dermatol 2009;61:30-5.

5. Entwistle N, Entwistle A. Contracting forms of understanding for degree examination: The student's experience and its implications. High Educ 1991;222:205-27.

6. Newble DI, Jaeger K. The effect of assessments and examinations on the learning of medical students. Med Educ 1983;17:165-71.

7. Barrows HA, Williams RG, Moy RH. A comprehensive performance based assessment of fourth year student's clinical skills. J Med Educ 1987;62:805-9.

8. Van der Vleuten CP, Swanson DB. Assessment of clinical skills with standardized patients: State of the art. Teach Learn Med 1990;2:58-76.

9. Vu NV, Barrows HS. Use of standardized patients in clinical assessments: Recent developments and measurement findings. Educ Res 1994;23:23-30.

10. Fowell SL, Bligh JG. Recent developments in assessing medical students. Postgrad Med J 1998;74:18-24.

11. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ 1979;13:41-54.

12. Holyfield JL, Bolin KA, Rankin KV, Shulman JD, Jones DL, Eden BE. Use of computer technology to modify objective structured clinical examinations. J Dent Educ 2005;69:1133-6.

13. Biran LA. Self assessment and learning through GOSCE (Group OSCE). Med Educ 1991;25:475-9.

14. Cookson J, Crossley J, Fagan G, McKendree J, Mohsen A. A final clinical examination using a sequential design to improve cost-effectiveness. Med Educ 2011;45:741-7.

15. Zartman R, McWhorter A, Seale N, Boone W. Using OSCE-based evaluation: Curricular impact over time. J Dent Educ 2002;66:1323-30.

16. Newble D. Techniques for measuring clinical competence: Objective structured clinical examinations. Med Educ 2004;38:99-203.

17. Johnson J, Kopp K, Williams R. Standardized patients for the assessment of dental students' clinical skills. J Dent Educ 1990;54:331-3.

18. Wu JJ, Davis KF, Ramirez CC, Alonso CA, Berman B, Tyring SK. Graduates-of-foreign-dermatology residencies and military dermatology residencies and women in academic dermatology. Dermatol Online J 2009;15:2.

19. Kaliyadan F, Manoj J, Dharmaratnam AD, Sreekanth G. Self-learning digital modules in dermatology: A pilot study. J Eur Acad Dermatol Venereol 2010;24:655-60.
