Construction and validation of an audio-visual tool to instruct caregivers of patients with pemphigus in photographing skin and oral cavity lesions during tele-consultation: A cross-sectional study

Corresponding author: Dr. Dipankar De, Department of Dermatology, Venereology and Leprology, Postgraduate Institute of Medical Education and Research, Chandigarh, India. dr_dipankar_de@yahoo.in

How to cite this article: Prarthana T, Handa S, Goel S, Mahajan R, De D. Construction and validation of an audio-visual tool to instruct caregivers of patients with pemphigus in photographing skin and oral cavity lesions during tele-consultation: A cross-sectional study. Indian J Dermatol Venereol Leprol. 2025;91:76-80. doi: 10.25259/IJDVL_361_2023

Abstract

Background

Owing to the lack of standardised audio-visual (A-V) instructions for taking photographs, patients with pemphigus faced difficulties during tele-consultation in the COVID-19 pandemic.

Objective

To construct and validate an A-V instruction tool to take photographs of skin and oral cavity lesions of pemphigus.

Methods

In this observational study, we included patients with pemphigus of either gender, aged 18 years or older, who were seeking tele-consultation. A-V instructions demonstrating skin and oral cavity photography were designed and shared with the patients, who were asked to send pictures of their lesions taken according to the instructions. They then completed a 10-item questionnaire for face validation. The videos were content validated in two rounds by 14 experts in the field of clinical dermatology.

Results

Forty-eight patients took part in face validation. Most patients rated the audio (47, 97.9%) and video (45, 93.8%) quality as average or above. Forty-seven patients (97.9%) said the instructional videos were useful, and 42 patients (87.5%) said they did not need to take any further images to show the severity of their disease. The average scale content validity index for the instructions on skin imaging and oral cavity imaging in Round 1 of content validation was 0.863 and 0.836, respectively.

Limitation

The validated instruction videos are in Hindi and need to be translated and validated in other local languages for use in non-Hindi-speaking regions.

Conclusion

A-V instructions were useful for taking photographs during tele-consultation.

Keywords

teleconsult

tele-consultation

pemphigus

photography

instruction video

Introduction

Pemphigus is an autoimmune blistering disease in which patients present with oral and/or skin lesions in the form of vesicles, erosions and crusted or healed lesions. The COVID-19 pandemic hampered direct physical examination and regular follow-up. Teleconsultation services through commonly used platforms such as WhatsApp alleviated these inconveniences to some extent. However, patients availing teleconsultation faced technical problems at various levels, including the inability to capture good-quality pictures and transmit them to the physician. Poor-quality or non-representative pictures may not help the physician assess the disease. We therefore aimed to construct and validate an audio-visual instruction tool (A-V tool) to help caregivers photograph skin and oral cavity lesions.

Methods

The study was performed after approval by the Institutional Ethics Committee (INT/IEC/2021/SPL.866, dated 25/05/21). It was conducted in two phases: the A-V tools were constructed in the first phase and validated in the second. Supplementary Figure 1 depicts the steps followed for construction and validation.

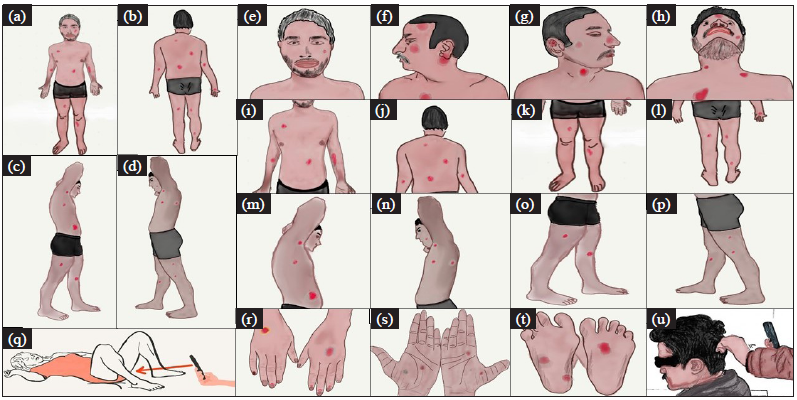

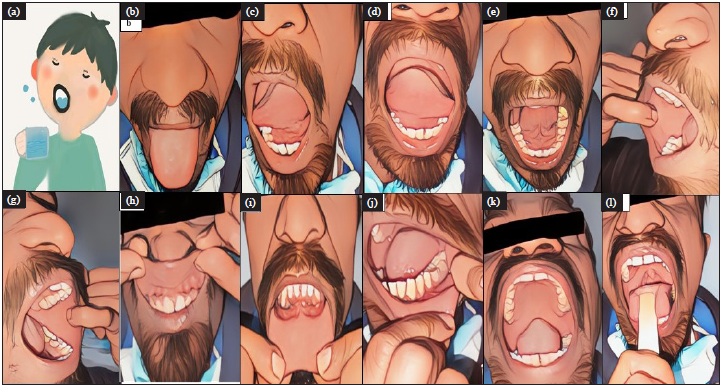

The final videos instructing caregivers on photography of skin and oral cavity lesions were created in storyboard format in Hindi [Videos 1 and 2]. Versions of the A-V tools with instructions dubbed in English [Videos 3 and 4] were subsequently created for the understanding of editors, reviewers and potential users of the A-V tools. The images used to illustrate the different positions for photographing skin lesions are shown in Figure 1a–u, and the images illustrating oral cavity lesion photography are shown in Figure 2a–l.

Figure 1: Positions of the patient for skin lesion photography. Overview imaging: (1a) patient standing straight with both arms by the sides of the body and palms facing forwards, front view, and (1b) back view; (1c) patient standing with arms raised and left leg forward, capturing the right side of the body, and (1d) right leg forward, capturing the left side of the body. Close-up images: (1e) front profile, (1f) left profile, (1g) right profile, (1h) extended neck, (1i) chest and abdomen, (1j) upper and lower back, (1k) lower body front and (1l) back view, (1m) upper body right and (1n) left lateral view, (1o) lower body depicting the inner side of the left lower limb with the outer side of the right lower limb, (1p) inner side of the right lower limb with the outer side of the left lower limb, (1q) genital and perineal area (red arrow), (1r) dorsa of hands, (1s) palms, (1t) soles, (1u) scalp.

Figure 2: Images used in the oral cavity photography instruction videos, demonstrating (2a) rinsing the mouth, (2b) upper surface of the tongue, (2c) left lateral border of the tongue, (2d) right lateral border of the tongue, (2e) ventral surface of the tongue and floor of the mouth, (2f) right buccal mucosa, (2g) left buccal mucosa, (2h) upper lip and upper gingival mucosa, (2i) lower lip and lower gingival mucosa, (2j) left lower lateral gingivobuccal sulcus (the same approach can be followed for the right lower lateral gingivobuccal sulcus), (2k) hard and soft palate, (2l) posterior wall of the pharynx.

A 10-item questionnaire was drafted to assess the comprehensibility and clarity of the A-V tools, the ease of taking photos and the usefulness of the tools from the caregivers' perspective [Supplement-Annexure 1]. For face validation of the A-V tools, a sample size of 40 was calculated from the 10 questionnaire items and a subject-to-item ratio of 4:1, a ratio used in most previous studies, although there is no consensus on it.1 Items with an agreement of 80% or more were considered validated.
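The sample-size rule and the 80% agreement criterion above reduce to simple arithmetic. The following is a minimal Python sketch under that reading; the function names are hypothetical helpers introduced for illustration, and the agreement counts in the usage lines are illustrative rather than study results.

```python
def sample_size(n_items: int, subjects_per_item: int) -> int:
    """Minimum number of respondents implied by a subject-to-item ratio."""
    return n_items * subjects_per_item


def item_validated(n_agree: int, n_respondents: int, threshold_pct: int = 80) -> bool:
    """True when at least threshold_pct percent of respondents agree with an item.

    Integer cross-multiplication avoids floating-point error at the boundary.
    """
    return n_agree * 100 >= threshold_pct * n_respondents


def min_agreeing(n_respondents: int, threshold_pct: int = 80) -> int:
    """Smallest count of agreeing respondents that meets the threshold (integer ceiling)."""
    return (threshold_pct * n_respondents + 99) // 100


if __name__ == "__main__":
    print(sample_size(10, 4))      # 40 respondents for 10 items at 4 per item
    print(min_agreeing(48))        # 39
    print(item_validated(39, 48))  # True  (81.25%)
    print(item_validated(38, 48))  # False (about 79.2%)
```

With 48 respondents, as recruited in this study, at least 39 agreeing responses are needed to reach 80%, because 38/48 is only about 79.2%.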

Fifteen experts in the field of clinical dermatology who are well versed in Hindi were contacted via email for content validation [Supplement-Annexure 2]. The questionnaire evaluated whether the videos were sufficient to educate caregivers to capture, with adequate clarity, all the areas needed to evaluate the Pemphigus Disease Area Index (PDAI), Autoimmune Bullous Skin Disorder Intensity Score (ABSIS), Oral Disease Severity Score (ODSS) and Pemphigus Oral Lesions Intensity Score (POLIS), which are widely used severity scoring systems for pemphigus.2–5 A total of 14 responses were received in Round 1. Content validity was assessed with the Content Validity Index (CVI) in two ways: the item-level CVI (I-CVI), the proportion of experts agreeing on each item, and the scale-level CVI calculated by the averaging method (S-CVI/AVE), the average proportion of items with which each expert agreed. An instrument with I-CVIs of 0.78 or higher and an S-CVI/AVE of 0.90 or higher is considered to have excellent validity.6 Based on the Round 1 responses, the tools were modified and sent, with the same questionnaire, to the same 14 experts for Round 2 of content validation.
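The two CVI calculations described above can be sketched in Python as follows. This is a minimal illustration, assuming each expert's response to each item is coded 1 (agree) or 0 (disagree); the 5-expert, 4-item ratings matrix and all helper names are hypothetical, not the study's data or software. It also shows that averaging the I-CVIs and averaging each expert's proportion of agreed items give the same S-CVI/AVE.

```python
from fractions import Fraction

# Hypothetical ratings: rows are experts, columns are questionnaire items.
# 1 = expert agrees the item is adequate, 0 = expert disagrees.
RATINGS = [
    [1, 1, 1, 1],
    [1, 1, 0, 1],
    [1, 1, 1, 0],
    [1, 1, 1, 1],
    [1, 1, 1, 1],
]

I_CVI_CUTOFF = Fraction(78, 100)  # I-CVI cut-off quoted in the text


def item_cvi(ratings: list[list[int]]) -> list[Fraction]:
    """I-CVI: for each item (column), the proportion of experts who agreed."""
    n_experts = len(ratings)
    return [Fraction(sum(column), n_experts) for column in zip(*ratings)]


def s_cvi_ave(ratings: list[list[int]]) -> Fraction:
    """S-CVI/AVE as the mean of the item-level CVIs."""
    icvis = item_cvi(ratings)
    return sum(icvis, Fraction(0)) / len(icvis)


def s_cvi_ave_by_expert(ratings: list[list[int]]) -> Fraction:
    """S-CVI/AVE as the mean, over experts, of the proportion of items each expert agreed with."""
    per_expert = [Fraction(sum(row), len(row)) for row in ratings]
    return sum(per_expert, Fraction(0)) / len(per_expert)


def items_below_cutoff(ratings: list[list[int]], cutoff: Fraction = I_CVI_CUTOFF) -> list[int]:
    """Zero-based indices of items whose I-CVI falls below the cut-off."""
    return [j for j, value in enumerate(item_cvi(ratings)) if value < cutoff]


if __name__ == "__main__":
    print([float(v) for v in item_cvi(RATINGS)])  # [1.0, 1.0, 0.8, 0.8]
    print(float(s_cvi_ave(RATINGS)), float(s_cvi_ave_by_expert(RATINGS)))  # 0.9 0.9
    print(items_below_cutoff(RATINGS))  # []
```

Exact fractions let the two averaging routes be compared without rounding error; in a real analysis the matrix would hold one row per responding expert.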

Results

Face validation of A-V tools

All 48 patients participating in the face validation study had smartphones with cameras and access to the Internet and WhatsApp. Of them, 27 (56.2%) were male and 21 (43.8%) were female. The mean (± standard deviation) age of the participants was 44.3 (± 15.1) years. The demographic details of the patients are presented in Table 1.

Table 1: Demographic details of the patients (n = 48)

| Variables | Values |
|---|---|
| Age (mean ± SD), in years | 44.3 ± 15.1 |
| Males, n (%) | 27 (56.2) |
| Females, n (%) | 21 (43.8) |
| Education status of patient, n (%) | |
| Graduate and above | 11 (22.9) |
| Higher secondary | 4 (8.3) |
| Secondary | 19 (39.6) |
| Primary | 7 (14.6) |
| No formal education | 7 (14.6) |
| Education status of the caregiver, n (%) | |
| Graduate and above | 24 (50.0) |
| Higher secondary | 9 (18.8) |
| Secondary | 8 (16.7) |
| Primary | 4 (8.3) |
| No formal education | 3 (6.2) |
| Number of caregivers required to take photographs, n (%) | |
| One caregiver | 38 (79.2) |
| Two caregivers | 10 (20.8) |

SD: Standard deviation

Forty-seven (97.9%) and 45 (93.8%) patients rated audio and video quality, respectively, as average or above. Forty-three patients (89.6%) reported that they did not need any clarification of the instructions. Two patients needed more clarification when photographing the oral cavity, and the remaining three did not specify what clarification they needed.

Forty-six (95.8%) caregivers did not find any difficulty in taking photographs according to the instructions. One patient reported that oral erosions could not be represented properly in photographs, while another did not specify the difficulty faced. Forty-seven (97.9%) patients reported that the instruction videos were helpful. Forty-two (87.5%) patients reported that no photos beyond those demonstrated in the videos were needed to represent their lesions and disease severity completely, while six (12.5%) had to take extra photos, of the head, knee, arm, mouth, genitals and gums. Eleven (22.9%) patients reported that they could not capture images of the back of the mouth, the inner part of the mouth and the head, even on repeated attempts. The details of patient agreement on face validation are presented in Table 2.

Table 2: Patient agreement on face validation of the A-V tools (n = 48)

| Items | Patient agreement, n (%) |
|---|---|
| Comprehensibility and clarity | |
| Completely understandable | 43 (89.6) |
| Audio quality average and above | 47 (97.9) |
| Video quality average and above | 45 (93.8) |
| More clarification not required | 43 (89.6) |
| Ease of taking photos | |
| Did not face difficulty in downloading video or uploading images | 47 (97.9) |
| Did not face difficulty in taking picture of any site | 46 (95.8) |
| There was no need for capturing extra photos | 42 (87.5) |
| Lesion over the genital area (n = 12) | |
| Hesitant to send photos | 6 (12.5) |
| Not hesitant to send photos | 6 (12.5) |
| Usefulness | |
| Video helped in demonstrating lesions to doctor | 47 (97.9) |
| Time required to understand the video is less than 10 minutes | 46 (95.8) |
| Time required to take photographs and transmit is less than 10 minutes | 35 (72.9) |
| Video helped in saving time from visiting physically | 40 (83.3) |
| Prefer to use telecommunication for routine consultation in future | 17 (35.4) |

Content validation of video instructions

The responses for content validation were assessed using the content validity index (CVI). Thirteen (92.8%) experts agreed that the content was sufficient to cover all the sites required for mucosal PDAI and ABSIS scoring. Ten (71.4%) and seven (50.0%) experts agreed that the content was sufficient to score ODSS and POLIS, respectively. Eleven (78.5%) experts agreed that the instructions covered all the sites for cutaneous PDAI, while three experts did not. Of these, one expert disagreed because the nose was not covered in the instruction video. Another point of disagreement was that the demonstration should be done on an undressed person. Another expert recommended including sites such as the axillae, upper inner thighs, palms, soles and nails as separate views. The details of expert agreement on content validation are given in Table 3. The images included in the instruction videos demonstrating photography of skin and oral cavity lesions are depicted in Figure 1a–u and Figure 2a–l, respectively.

Table 3: Expert agreement on content validation of the A-V tools (Round 1, n = 14)

| Items | Expert agreement, n (%) | CVI |
|---|---|---|
| Structure and presentation of video (oral cavity) | | |
| Storyboard format is appropriate | 14 (100) | 1 |
| Sequence of depiction is appropriate | 13 (92.8) | 0.92 |
| Quality of audio clear and understandable | 14 (100) | 1 |
| Quality of video average and above | 14 (100) | 1 |
| No grammatical errors | 14 (100) | 1 |
| No audio-visual lag in the video | 14 (100) | 1 |
| Objectives (oral cavity) | | |
| All the sites for PDAI scoring included | 13 (92.8) | 0.92 |
| All the sites for ABSIS scoring included | 13 (92.8) | 0.92 |
| All the sites for ODSS scoring included | 10 (71.4) | 0.71 |
| All the sites for POLIS scoring included | 7 (50.0) | 0.50 |
| Structure and presentation of video (skin lesion) | | |
| Storyboard format is appropriate | 14 (100) | 1 |
| Sequence of depiction is appropriate | 13 (92.8) | 0.92 |
| Quality of audio is clear and understandable | 14 (100) | 1 |
| Quality of video good or excellent | 14 (100) | 1 |
| No grammatical errors | 14 (100) | 1 |
| No audio-visual lag in the video | 13 (92.8) | 0.92 |
| Objectives (skin lesions) | | |
| All the required sites for PDAI included | 11 (78.5) | 0.78 |
| All the sites for ABSIS included | 13 (92.8) | 0.92 |

CVI, content validity index; PDAI, pemphigus disease area index; ABSIS, autoimmune bullous skin disorder intensity score; ODSS, oral disease severity score; POLIS, pemphigus oral lesions intensity score

The average scale-level content validity index (S-CVI/AVE) for the oral cavity and skin imaging videos was 0.863 and 0.836, respectively. Five of the 10 items in the oral cavity imaging questionnaire and 4 of the 8 items in the skin lesion imaging questionnaire showed universal agreement, giving a scale-level CVI by universal agreement (S-CVI/UA) of 0.50 for both the oral and skin lesion imaging videos. All suggestions for improving the A-V tools received in Round 1 of content validation were incorporated, and the modified videos were sent to the same 14 experts who had responded in Round 1. Thirteen experts responded in Round 2, and there was universal agreement on all components of the questionnaire at the end of that round. The final versions of the original A-V tools for photographing oral cavity and skin lesions last 2 min 39 s and 3 min 39 s, respectively.
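The universal-agreement values follow directly from the item counts reported above. Writing out the standard S-CVI/UA definition (the proportion of items that every expert endorsed), which the text applies but does not state formally:

$$
\text{S-CVI/UA} = \frac{\text{number of items with I-CVI} = 1}{\text{total number of items}}, \qquad
\frac{5}{10} = 0.50 \ \text{(oral cavity)}, \qquad
\frac{4}{8} = 0.50 \ \text{(skin lesions)}
$$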

Discussion

Teledermatology (TD) is the delivery of dermatology services at a distance. It can be synchronous, asynchronous or a hybrid of the two. Hybrid consultation is more appropriate for complex problems such as autoimmune bullous diseases and connective tissue disorders.7 Among other problems, patients may have trouble taking pictures during TD; the two A-V tools were created and validated to address this problem.

General factors such as the background, ambient lighting and the patient's position all have a significant impact on photograph quality. According to Jakowenko et al., a non-reflective, neutral grey drape or sheet is the ideal background for clinical photography.8 Photographs should ideally be captured in natural light, which provides even illumination. Most smartphone cameras have an integrated flash that can be used to photograph lesions in the oral cavity and in poor lighting conditions.8 According to Witmer et al., total body photography needs a minimum of 25–30 photographs to cover the entire body with different positioning and posing of the subject.9 For convenience, caregivers were instructed to take four overview images (front, back and both sides) at double arm's distance from the subject to show the distribution of lesions, followed by additional close-up pictures of the trunk and limbs in various body positions.

For the oral severity scores, most experts agreed that the tool contained all the elements necessary for mucosal PDAI and oral ABSIS, although four experts disagreed regarding the ODSS. Their reasons were difficulty in assessing erythema, difficulty in photographing the gingivobuccal sulcus and lateral portions of the gingiva, and the pain component of the score, which requires separate assessment. The final version of the tool was more focused on capturing lesions in the lateral gingivobuccal sulcus. Agreement among the experts was lowest for POLIS scoring because of the difficulty in measuring the depth of lesions.

Eleven experts agreed that the information was adequate to score the scalp and cutaneous components of the PDAI. Of the three experts who disagreed, one noted that the tool did not cover the nose; another disagreement was that the demonstration should be performed on an undressed person. To make the video aesthetically pleasing, cartoon-style images and drawings were also used in the English version of the A-V tool. Some experts advised including areas such as the axillae, upper inner thighs, palms, soles and nails, which are clearly illustrated in the English version of the A-V tools. The final version of the tool includes instructions for capturing pictures of the palms, soles and genital region, including the perineum.

An I-CVI of 0.79 or above is considered acceptable.10 By this criterion, at the end of the first round of content validation all items met the threshold except those on the content required to score ODSS (I-CVI 0.71), POLIS (0.50) and cutaneous PDAI (0.78). The S-CVI/AVE of both the oral cavity and skin lesion photography tools was above 0.75, indicating good overall content validity.

Limitation

The validated videos are in Hindi, which limits their use to Hindi-speaking regions. They need to be translated and validated in other local languages before they can be used in non-Hindi-speaking regions within and outside India.

Conclusion

A-V tools are useful for laypersons in photographing oral cavity and skin lesions, ensuring comprehensive coverage of all body areas necessary for severity assessment during teleconsultations.

Acknowledgment

We extend our thanks to experts, including Dr. Bhabani Singh, Dr. Brijesh Nair, Dr. Deepika Pandhi, Dr. Divya Gupta, Dr. Feroze Kaliyadan, Dr. Geeti Khullar, Dr. Lalit Gupta, Dr. Mahendra Kura, Dr. Nilay Kanti Das, Dr. Shekhar Neema, Dr. Shyamanta Barua, Dr. Sudip Ghosh, Dr. Vijay Zawar, and Dr. Yasmeen J Bhat for their participation in the content validation of the instructional video used in this study. We also thank all the patients and caregivers who took part in face validation of the instruction videos. Special thanks to Dr. Shrimanth YS and Dr. Vinod Kumar Hanumanthu for their contributions to video creation/editing and Dr. Smriti Gupta for providing the Hindi voiceover, and a heartfelt acknowledgment to Mr. Anil, the hospital attendant, for his role as a demonstrator in the instructional video.

Ethical approval

The study was performed after the approval by the Institutional Ethics Committee vide letter number INT/IEC/2021/SPL.866, dated 25/05/21.

Declaration of patient consent

The authors certify that they have obtained all appropriate patient consent.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Use of artificial intelligence (AI)-assisted technology for manuscript preparation

The authors confirm that there was no use of artificial intelligence (AI)-assisted technology for assisting in the writing or editing of the manuscript and no images were manipulated using AI.

References

- Sample size used to validate a scale: A review of publications on newly-developed patient reported outcomes measures. Health Qual Life Outcomes. 2014;12:176.

- Pemphigus disease activity measurements: Pemphigus disease area index, autoimmune bullous disorder intensity score, and pemphigus vulgaris activity score. JAMA Dermatol. 2014;150:266-72.

- Large international validation of ABSIS and PDAI pemphigus severity scores. J Invest Dermatol. 2019;139:31-7.

- Validation of an oral disease severity score (ODSS) tool for use in oral mucous membrane pemphigoid. Br J Dermatol. 2020;183:78-85.

- Pemphigus oral lesions intensity score (POLIS): A novel scoring system for assessment of severity of oral lesions in pemphigus vulgaris. Front Med. 2020;7:449.

- [Content validity index in scale development] Zhong Nan Da Xue Xue Bao Yi Xue Ban. 2012;37:152-5.

- Teledermatology in the COVID-19 pandemic: A systematic review. JAAD Int. 2021;5:54-64.

- Clinical photography in the dermatology practice. Semin Cutan Med Surg. 2012;31:191-9.

- Design and implementation content validity study: Development of an instrument for measuring patient-centered communication. J Caring Sci. 2015;4:165-78.
